See multimethod-experiments for details.

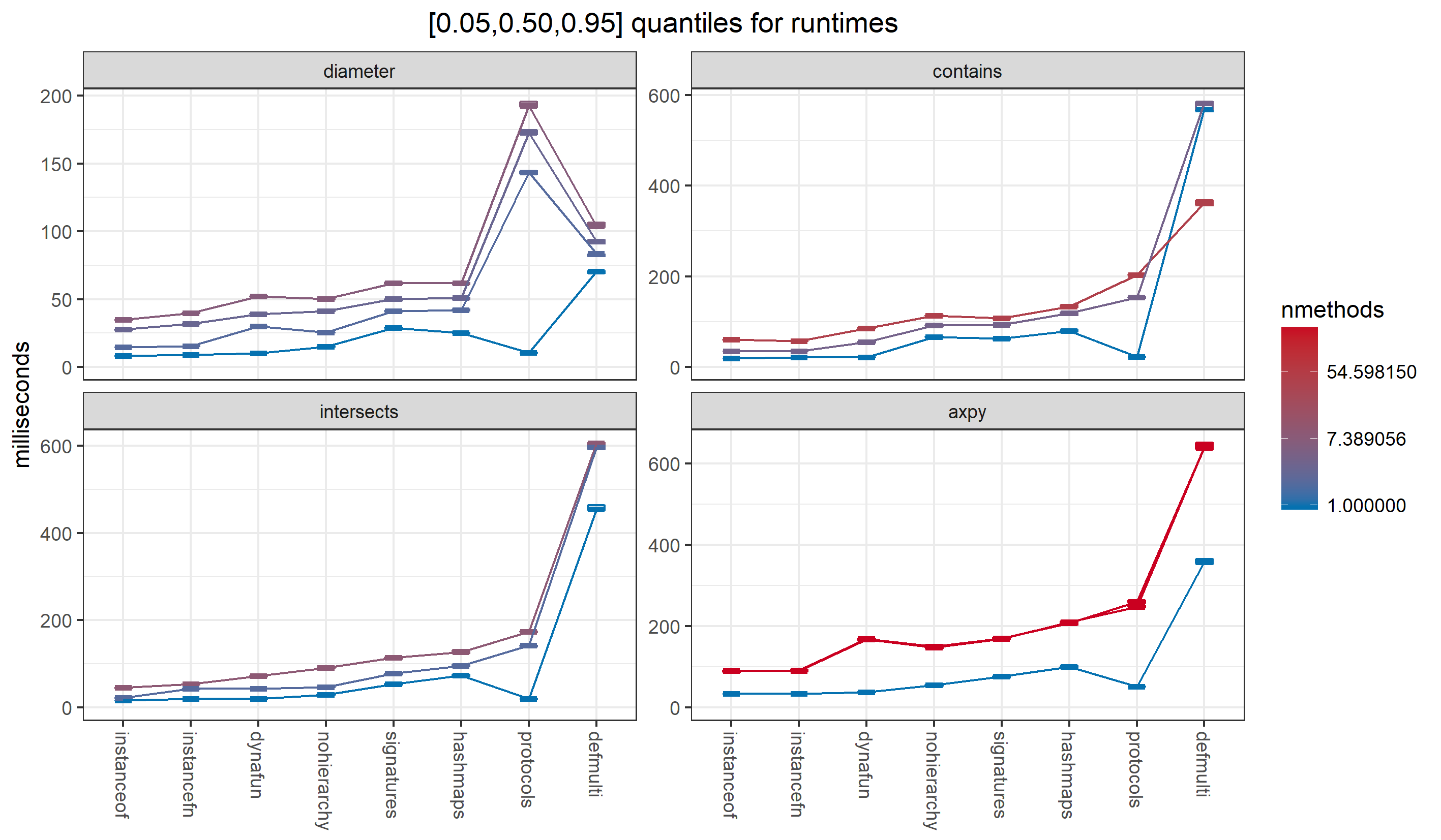

Runtimes for various dynamic method lookup algorithms:

- hashmaps: (faster-multimethods) Clojure 1.8.0 behavior except for 'bug' fixes. Replaces persistent hashmaps with Java hashmaps. (See changes for details.)
- signatures: (faster-multimethods) uses specialized `Signature` dispatch values in place of persistent vectors.
- nohierarchy: (faster-multimethods) optimizes for pure class-based dispatch.
- defmulti: Clojure 1.8.0 `defmulti`.
- protocols: Clojure 1.8.0 `defprotocol`, with hand-optimized if-then-else `instance?` calls to look up the correct method based on all arguments.
- instanceof: hand-optimized if-then-else Java method lookup.
- instancefn: same as `instanceof`, but implemented in Clojure and invoking Clojure functions rather than Java methods.
- dynafun: an experimental, incomplete library that abandons consistency with Clojure 1.8.0: no hierarchies, only pure class-based method definition and lookup. For small arities, the current version does a linear search in nested arrays and avoids allocating and reclaiming dispatch values.
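The following minimal Java sketch contrasts the `instanceof` baseline with the hashmap/signature variants for two-argument dispatch. It is an illustration under stated assumptions, not code from faster-multimethods. The `Circle` and `Square` types, the `DispatchSketch` class, and its method names are hypothetical. The `Signature` class here is a hypothetical stand-in for the library's specialized dispatch values. The first strategy is a hand-written if-then-else `instanceof` chain. The second builds a dispatch value from the exact argument classes and looks it up in a `java.util.HashMap`.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.BiFunction;

// Hypothetical sketch: two ways to select a method from the classes of two arguments.
public class DispatchSketch {
    static final class Circle {}
    static final class Square {}

    // Strategy 1: a hand-written if-then-else chain of instanceof tests,
    // in the spirit of the "instanceof" baseline.
    static String viaInstanceof(Object a, Object b) {
        if (a instanceof Circle) {
            if (b instanceof Circle) return "circle/circle";
            if (b instanceof Square) return "circle/square";
        } else if (a instanceof Square) {
            if (b instanceof Circle) return "square/circle";
            if (b instanceof Square) return "square/square";
        }
        throw new IllegalArgumentException("no method for " + a + ", " + b);
    }

    // Strategy 2: a small immutable dispatch value holding the exact argument
    // classes, used as the key into a java.util.HashMap of methods.
    static final class Signature {
        final Class<?> c0;
        final Class<?> c1;

        Signature(Class<?> c0, Class<?> c1) {
            this.c0 = c0;
            this.c1 = c1;
        }

        @Override
        public boolean equals(Object o) {
            if (!(o instanceof Signature)) return false;
            Signature s = (Signature) o;
            return c0 == s.c0 && c1 == s.c1;
        }

        @Override
        public int hashCode() {
            return 31 * c0.hashCode() + c1.hashCode();
        }
    }

    static final Map<Signature, BiFunction<Object, Object, String>> METHODS = new HashMap<>();

    static {
        METHODS.put(new Signature(Circle.class, Circle.class), (a, b) -> "circle/circle");
        METHODS.put(new Signature(Circle.class, Square.class), (a, b) -> "circle/square");
        METHODS.put(new Signature(Square.class, Circle.class), (a, b) -> "square/circle");
        METHODS.put(new Signature(Square.class, Square.class), (a, b) -> "square/square");
    }

    static String viaTable(Object a, Object b) {
        // A fresh Signature (dispatch value) is allocated on every call.
        BiFunction<Object, Object, String> m =
                METHODS.get(new Signature(a.getClass(), b.getClass()));
        if (m == null) throw new IllegalArgumentException("no method for " + a + ", " + b);
        return m.apply(a, b);
    }

    public static void main(String[] args) {
        Object c = new Circle();
        Object s = new Square();
        System.out.println(viaInstanceof(c, s)); // circle/square
        System.out.println(viaTable(s, c));      // square/circle
    }
}
```

The table is keyed on exact classes, so it ignores subclasses and hierarchies entirely. A full multimethod implementation would need a slower fallback search on a miss. The table version also allocates a new `Signature` per call, which is the kind of dispatch-value allocation that dynafun avoids for small arities.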

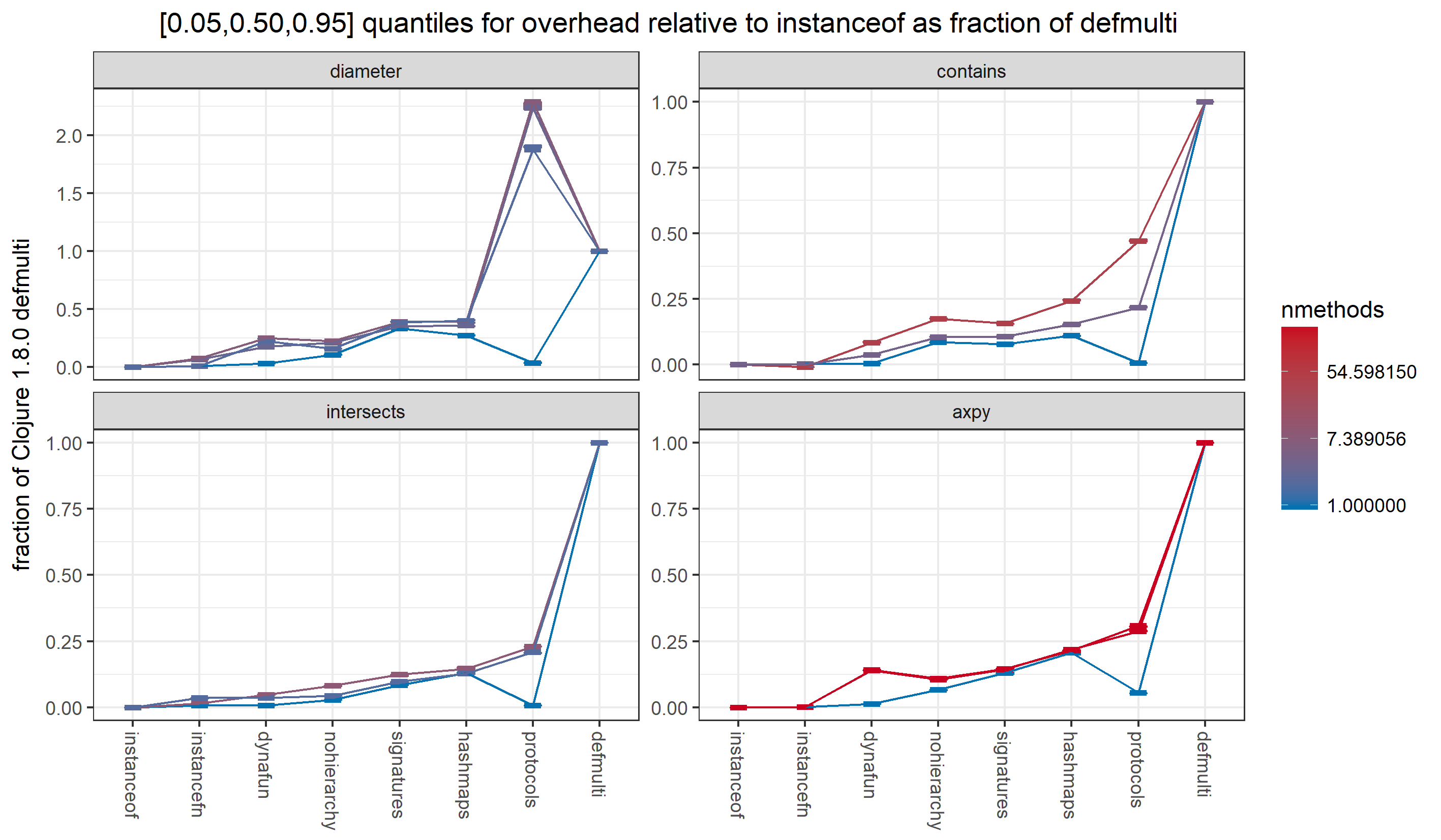

Overhead relative to the hand-optimized Java if-then-else `instanceof` algorithm (the baseline), expressed as a fraction of the overhead of Clojure 1.8.0 `defmulti`:
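Read literally, that normalization can be written as follows. The symbols are introduced here for illustration and are not taken from the benchmark code. $t_A$ is the runtime of algorithm $A$, $t_{\text{instanceof}}$ the baseline runtime, and $t_{\text{defmulti}}$ the Clojure 1.8.0 `defmulti` runtime:

$$
\text{overhead}(A) = \frac{t_A - t_{\text{instanceof}}}{t_{\text{defmulti}} - t_{\text{instanceof}}}
$$

Under this reading the `instanceof` baseline sits at 0 and `defmulti` at 1. For example, with hypothetical runtimes $t_{\text{instanceof}} = 10$ ms, $t_{\text{defmulti}} = 50$ ms and $t_A = 20$ ms, $\text{overhead}(A) = (20 - 10)/(50 - 10) = 0.25$.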

Note that faster-multimethods outperforms Clojure 1.8.0 protocols except in the case of repeated calls to a single method (the lowest curve in each plot). When restricted to pure class-based dispatch (nohierarchy), faster-multimethods comes close to protocols even in the single-repeated-method case. It also remains fully dynamic, unlike protocols, which are only dynamic in the first (`this`) argument.
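The difference between receiver-only and all-argument dispatch can be sketched with a Java analogy. This is an illustration under stated assumptions, not a description of how Clojure compiles protocols. The `Collide` interface, the shape classes, and the `dispatch` helper are all hypothetical. The JVM's virtual call picks the implementation from the first (`this`) argument only. Dispatch on the second argument therefore has to be written by hand as `instanceof` tests, much like the hand-optimized `instance?` calls in the protocols benchmark.

```java
// Hypothetical Java analogy for protocol-style dispatch: the JVM selects the
// implementation from the receiver's class; the other argument is tested by hand.
public class ReceiverDispatchSketch {
    interface Collide {
        String collide(Object other);
    }

    static final class Circle implements Collide {
        @Override
        public String collide(Object other) {
            // Second-argument dispatch is a fixed, hand-written instanceof chain.
            if (other instanceof Circle) return "circle/circle";
            if (other instanceof Square) return "circle/square";
            throw new IllegalArgumentException("no method for " + other);
        }
    }

    static final class Square implements Collide {
        @Override
        public String collide(Object other) {
            if (other instanceof Circle) return "square/circle";
            if (other instanceof Square) return "square/square";
            throw new IllegalArgumentException("no method for " + other);
        }
    }

    static String dispatch(Collide self, Object other) {
        // Virtual call: only self's runtime class influences which method runs.
        return self.collide(other);
    }

    public static void main(String[] args) {
        System.out.println(dispatch(new Circle(), new Square())); // circle/square
        System.out.println(dispatch(new Square(), new Square())); // square/square
    }
}
```

Supporting a new type in the second position means editing the `instanceof` chain in every existing implementation. A multimethod, by contrast, can add a method for a new argument combination at run time without touching the existing ones.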

I don't understand why protocols are so much worse than everything else for the diameter benchmark. That is a single-argument multimethod (`this` only) and ought to be an easy case for protocols.

A caveat: These benchmarks are measured after a lot of warmup, giving HotSpot plenty of time to optimize what it can. Results might be very different in scenarios where the methods are not called as often.