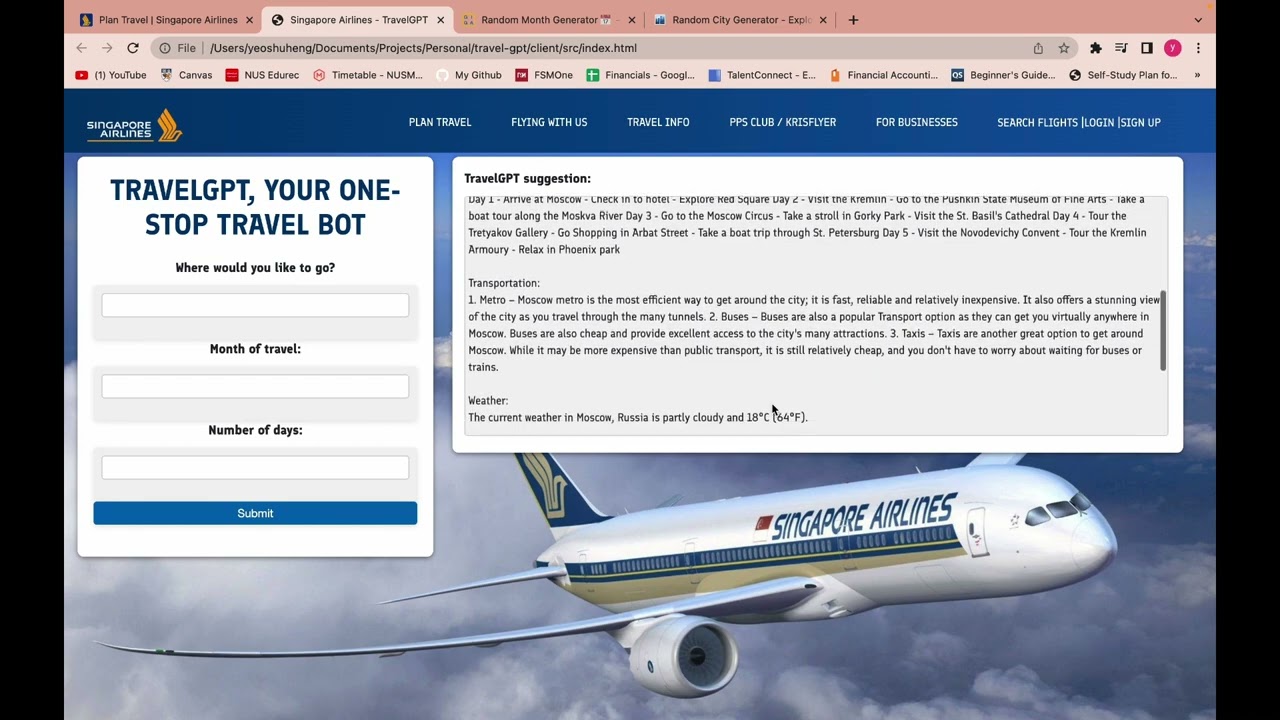

Your one-stop travel buddy app, built for lazy tourists. Built for the SIA Application Challenge under the Generative AI category.

- Introduction

- Demo

- Tools

- Project Structure

- Getting Started

- Technology Choices

- Testing & Optimization

- Credits

Introduction

Travel guides on SIA's websites currently cover few locations and offer little information about each one. It is, however, unrealistic for SIA to provide handy travel guides for every location. For tourists, gathering information across various sources is also cumbersome, so we wanted a one-stop solution for everything from itinerary recommendations to weather forecasts. To that end, we decided to leverage the growing trend of free-to-use LLMs such as ChatGPT to help tourists generate recommendations and gather related information.

Tools

- Frontend: JavaScript, HTML, CSS

- Backend: Python, Flask, OpenAI API, LangChain

- Database: Redis

- Tooling: Docker, Postman

Project Structure

```
.
├── client
│   ├── images
│   ├── src
│   ├── styles
│   └── app.js
├── config
│   └── config.json   # Put OpenAI API key here
├── launch
│   ├── launch.bat    # For Windows
│   └── launch.sh     # For Unix
└── server
    ├── database
    ├── chat
    └── main.py
```

Getting Started

- Clone this repository:

```bash
git clone https://github.com/yeoshuheng/travel-gpt.git
```

- Create an OpenAI account by visiting the OpenAI API page.

- Create an API key under Settings > User > API Key and copy it.

- Open travel-gpt/config/config.json and add your API key.

- Install Docker

- Run Docker

- Run the application.

MacOS

On macOS, you first need to give Google Chrome Full Disk Access. To do so, go to System Preferences > Privacy & Security > Full Disk Access and add Google Chrome.

Afterwards, cd into the project's launch directory and run:

```bash
bash launch.sh
```

The application should open. Wait about a minute for the Docker build to complete, and you can then enjoy the app.

Windows

On Windows, cd into the project's launch directory and run:

```bat
start launch.bat
```

The application should open. Wait about a minute for the Docker build to complete, and you can then enjoy the app.
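Both launch scripts rely on waiting roughly a minute for the Docker build. As an alternative, a small script can poll the backend until it answers. This is a minimal sketch using only the Python standard library; the address http://localhost:5000/ is a hypothetical assumption (Flask's default development port), since this README does not state which host port the backend is published on.

```python
import time
import urllib.error
import urllib.request

# Hypothetical address: the README does not say which port the backend container exposes.
BACKEND_URL = "http://localhost:5000/"


def wait_for_backend(url: str = BACKEND_URL, timeout: float = 120.0, interval: float = 2.0) -> bool:
    """Poll `url` until the server answers with any HTTP response, or give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # The server replied (e.g. 404 on "/"), so it is up even if the route does not exist.
            return True
        except OSError:
            # Connection refused or timed out (URLError is an OSError): the build is probably still running.
            time.sleep(interval)
    return False
```

Running `wait_for_backend()` after the launch script returns True once the server responds, or False if it is still unreachable after two minutes.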

Manual

You can also start the app manually. From the project directory, run:

```bash
docker compose up --build
```

Afterwards, open ./client/src/index.html in your browser and enjoy the application.
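The setup steps place the OpenAI API key in config/config.json. This README does not say how main.py reads that file or which field name it expects, so the sketch below assumes a hypothetical field called OPENAI_API_KEY. It shows one way to load the key and fail early with a clear message when it is missing.

```python
import json
from pathlib import Path

# Hypothetical field name: the README does not specify the structure of config.json.
API_KEY_FIELD = "OPENAI_API_KEY"


def load_api_key(config_path: Path = Path("config/config.json")) -> str:
    """Read the OpenAI API key from the project's JSON config file."""
    with config_path.open(encoding="utf-8") as f:
        config = json.load(f)
    key = str(config.get(API_KEY_FIELD) or "").strip()
    if not key:
        # Fail fast with a readable error instead of an authentication error from the API later.
        raise ValueError(f"{API_KEY_FIELD} is missing or empty in {config_path}")
    return key
```

With a config file such as {"OPENAI_API_KEY": "<your key>"}, calling load_api_key() from the project root returns the key with surrounding whitespace stripped.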

Technology Choices

- While LangChain is available in both JavaScript and Python, we adopted the Python version because Python is traditionally used to work with data. We felt it would give us more flexibility if we later add more data-driven features, or if we switch to a different model and fine-tune it with transfer learning.

- Since we were already working in Python, we also decided to use Flask for our backend.

- A future consideration is to port the backend to Mojo, a Pythonic language that aims to run with the performance of C++. Mojo also plans to support popular Python data-manipulation packages and already supports Matplotlib and NumPy.

- Redis is easy to set up, and as an in-memory key-value store it serves simple lookups more efficiently than a relational SQL database. Since our application has no complex queries that require SQL, we chose Redis as our caching database. However, this may change as the app evolves and more functionality is developed.

- We Dockerized the application to simplify deployment, whether on our local devices or in the cloud.

Testing & Optimization

- One issue we noticed is the long wait between a user's input and the app's response, because the LLM has to generate each response. We optimised this by caching standard responses that do not change (e.g. the description of a travel location) in a Redis database.

- The Flask server's API has been tested with Postman.
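The caching described above follows the cache-aside pattern: look up the key first, and only call the LLM on a miss. The sketch below is a minimal, self-contained illustration, not the project's actual code. The InMemoryCache class is a hypothetical stand-in for a Redis client, the description:<location> key scheme is an assumption, and generate stands in for whatever function calls the LLM.

```python
from typing import Callable, Dict, Optional


class InMemoryCache:
    """Hypothetical stand-in for a Redis client: only get/set on string keys and values."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)

    def set(self, key: str, value: str) -> None:
        self._store[key] = value


def get_location_description(location: str, cache, generate: Callable[[str], str]) -> str:
    """Cache-aside lookup: return a cached description, calling the LLM only on a cache miss."""
    # Hypothetical key scheme; normalising the name lets "Tokyo" and " tokyo " share one entry.
    key = f"description:{location.strip().lower()}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    description = generate(location)  # slow path: one LLM round trip
    cache.set(key, description)
    return description
```

To use a real Redis instance instead, a redis-py client created with redis.Redis(decode_responses=True) can be passed as cache, since it returns strings from get and accepts set(key, value). redis-py's set also takes ex= (expiry in seconds) if cached entries should eventually be refreshed.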

Credits

These tutorials were an amazing help when setting up the application:

- Salvador Villalon's freeCodeCamp article on building applications in Flask

- Paul Issack's explanation on Dockerfiles

- Official Documentation for LangChain

- Official Documentation for Docker Compose

- Official Documentation for OpenAI API